Earlier this week, Ollama introduced an exciting new feature: tool support for Large Language Models (LLMs).

Today, we're thrilled to announce that Spring AI (1.0.0-SNAPSHOT) has fully embraced this powerful feature, bringing Ollama's function calling capabilities to the Spring ecosystem.

Ollama's tool support allows models to decide when to call external functions and how to use the returned data. This opens up a world of possibilities, from accessing real-time information to performing complex calculations. Spring AI takes this concept and integrates it seamlessly with the Spring ecosystem, making it easy for Java developers to use this functionality in their applications. Key features of Spring AI's Ollama function calling support include:

First, you need Ollama 0.2.8 or later running on your local machine. Refer to the official Ollama project README to get started.
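If you want your application to guard against an older Ollama, checking the 0.2.8 minimum comes down to a numeric version comparison. The sketch below is a hypothetical helper, not part of Spring AI or Ollama: OllamaVersionCheck and isAtLeast are illustrative names, and the version strings are hard-coded rather than read from a real installation.

```java
import java.util.Arrays;

// Hypothetical helper, not an Ollama or Spring AI API.
// Checks whether a dotted version string meets the 0.2.8 minimum for tool support.
public class OllamaVersionCheck {

    static final String MINIMUM = "0.2.8";

    // Compares versions component by component, so "0.10.0" counts as newer than "0.2.8".
    static boolean isAtLeast(String version, String minimum) {
        int[] v = parse(version);
        int[] m = parse(minimum);
        for (int i = 0; i < Math.max(v.length, m.length); i++) {
            int a = i < v.length ? v[i] : 0; // missing components count as 0
            int b = i < m.length ? m[i] : 0;
            if (a != b) {
                return a > b;
            }
        }
        return true; // all components are equal
    }

    // Drops any pre-release suffix such as "-rc1", then splits on dots.
    static int[] parse(String version) {
        String core = version.split("-", 2)[0];
        return Arrays.stream(core.split("\\."))
                .mapToInt(Integer::parseInt)
                .toArray();
    }

    public static void main(String[] args) {
        for (String v : new String[] {"0.2.7", "0.2.8", "0.3.0", "0.10.1"}) {
            System.out.println(v + " meets the " + MINIMUM + " minimum: " + isAtLeast(v, MINIMUM));
        }
    }
}
```

Comparing numerically matters here: as plain strings, "0.10.0" would sort before "0.2.8".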

Then pull a model that supports tools, such as Llama 3.1, Mistral, Firefunction v2, Command-R+, and others.

A list of supported models can be found under the Tools category on the Ollama models page.

ollama run mistral

To start using Ollama function calling with Spring AI, add the following dependency to your project:

<dependency>
    <groupId>org.springframework.ai</groupId>
    <artifactId>spring-ai-ollama-spring-boot-starter</artifactId>
</dependency>

Refer to the Dependency Management section to add the Spring AI BOM to your build file.

Here's a simple example of how to use Ollama function calling with Spring AI:

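import java.util.function.Function;

import org.springframework.ai.chat.client.ChatClient;
import org.springframework.boot.CommandLineRunner;
import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Description;

@SpringBootApplication
public class OllamaApplication {

    public static void main(String[] args) {
        SpringApplication.run(OllamaApplication.class, args);
    }

    @Bean
    CommandLineRunner runner(ChatClient.Builder chatClientBuilder) {
        return args -> {
            var chatClient = chatClientBuilder.build();

            var response = chatClient.prompt()
                    .user("What is the weather in Amsterdam and Paris?")
                    .functions("weatherFunction") // reference the function by its bean name
                    .call()
                    .content();

            System.out.println(response);
        };
    }

    // The @Description text tells the model what the function does.
    @Bean
    @Description("Get the weather in location")
    public Function<MockWeatherService.WeatherRequest, MockWeatherService.WeatherResponse> weatherFunction() {
        return new MockWeatherService();
    }

    // Mock implementation: returns fixed temperatures instead of calling a weather API.
    public static class MockWeatherService
            implements Function<MockWeatherService.WeatherRequest, MockWeatherService.WeatherResponse> {

        public record WeatherRequest(String location, String unit) {}

        public record WeatherResponse(double temp, String unit) {}

        @Override
        public WeatherResponse apply(WeatherRequest request) {
            double temperature = request.location().contains("Amsterdam") ? 20 : 25;
            return new WeatherResponse(temperature, request.unit());
        }
    }
}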

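In this example, when the model decides it needs weather information, Spring AI automatically calls the weatherFunction bean and passes the result back to the model. MockWeatherService returns hard-coded temperatures; a real implementation could fetch live weather data instead.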

The response should look something like this, although the exact wording varies by model and run: "The weather in Amsterdam is currently 20 degrees Celsius, and the weather in Paris is currently 25 degrees Celsius."
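The round trip behind this example can be sketched in plain Java, without Spring or a model. This illustrates the idea only and is not Spring AI's implementation: ToolCall, REGISTRY, and dispatch are hypothetical names, and the two tool calls are hard-coded to stand in for what a model might request for the Amsterdam and Paris question (the "C" unit is likewise an assumption).

```java
import java.util.Map;
import java.util.function.Function;

// Plain-Java sketch of a tool-call round trip. Not Spring AI code:
// ToolCall, REGISTRY and dispatch are hypothetical stand-ins.
public class ToolCallRoundTrip {

    record WeatherRequest(String location, String unit) {}

    record WeatherResponse(double temp, String unit) {}

    // Same logic as MockWeatherService in the Spring example.
    static final Function<WeatherRequest, WeatherResponse> WEATHER_FUNCTION =
            request -> new WeatherResponse(
                    request.location().contains("Amsterdam") ? 20 : 25, request.unit());

    // What a model's tool call boils down to once its JSON arguments are parsed.
    record ToolCall(String name, WeatherRequest arguments) {}

    // Functions registered by name, like referencing the bean as "weatherFunction".
    static final Map<String, Function<WeatherRequest, WeatherResponse>> REGISTRY =
            Map.of("weatherFunction", WEATHER_FUNCTION);

    // Looks up the requested function by name and invokes it with the parsed arguments.
    static WeatherResponse dispatch(ToolCall call) {
        Function<WeatherRequest, WeatherResponse> fn = REGISTRY.get(call.name());
        if (fn == null) {
            throw new IllegalArgumentException("Unknown tool: " + call.name());
        }
        return fn.apply(call.arguments());
    }

    public static void main(String[] args) {
        // Hard-coded stand-ins for the calls a model might request for both cities.
        ToolCall[] calls = {
            new ToolCall("weatherFunction", new WeatherRequest("Amsterdam", "C")),
            new ToolCall("weatherFunction", new WeatherRequest("Paris", "C"))
        };
        for (ToolCall call : calls) {
            // In the real flow, each result goes back to the model,
            // which then writes the final natural-language answer.
            System.out.println(call.arguments().location() + " -> " + dispatch(call));
        }
    }
}
```

In the Spring example, parsing the model's JSON arguments into WeatherRequest and sending the results back to the model are handled by Spring AI; the sketch keeps only the name-based lookup and invocation.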

The full example code is available at: https://github.com/tzolov/ollama-tools

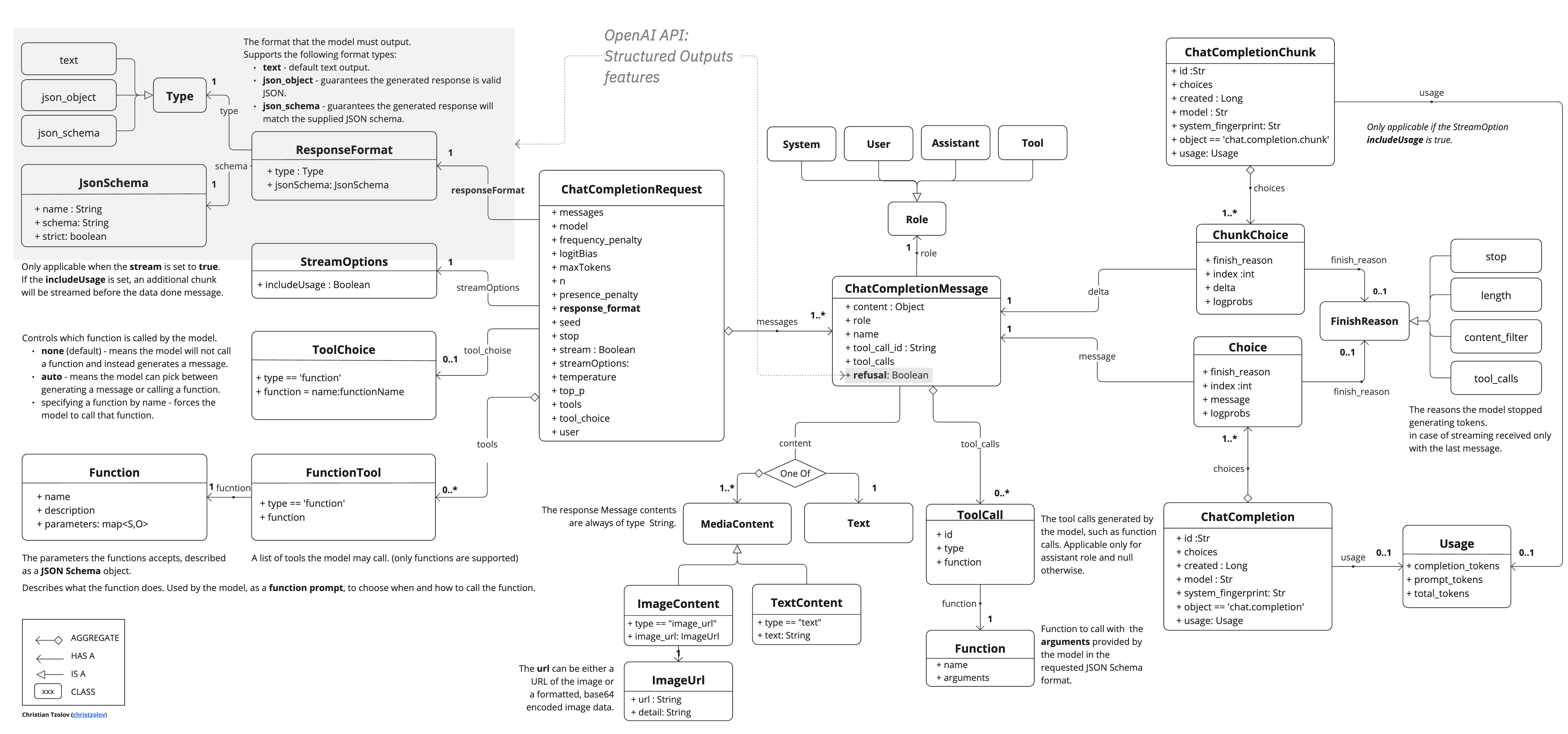

Ollama is also compatible with the OpenAI API, so you can use the Spring AI OpenAI client to talk to Ollama and call tools.

To do this, use the OpenAI client but point its base URL at your local Ollama server, spring.ai.openai.chat.base-url=http://localhost:11434, and select one of the Ollama models that support tools, for example spring.ai.openai.chat.options.model=mistral.

See the OllamaWithOpenAiChatModelIT.java tests for examples of using Ollama through the Spring AI OpenAI client.
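Under the hood, the OpenAI client sends an OpenAI-style chat completion request that lists the available tools. The sketch below builds a comparable request with the JDK's java.net.http client so you can see its shape. It assumes Ollama serves the OpenAI-compatible endpoint at /v1/chat/completions and that your Ollama version accepts the tools field there; the JSON is a hand-written approximation, not the exact payload Spring AI generates. The request is only sent when the program is run with --send, a flag of this sketch.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

// Sketch of an OpenAI-style tool request against Ollama. The endpoint path and
// payload shape are assumptions based on Ollama's OpenAI compatibility.
public class OllamaOpenAiToolRequest {

    // Same values as the spring.ai.openai.chat.* properties above.
    static final String BASE_URL = "http://localhost:11434";
    static final String MODEL = "mistral";

    // Chat completion request that advertises a single weather tool.
    static String requestBody(String model) {
        return """
            {
              "model": "%s",
              "messages": [
                {"role": "user", "content": "What is the weather in Amsterdam and Paris?"}
              ],
              "tools": [{
                "type": "function",
                "function": {
                  "name": "weatherFunction",
                  "description": "Get the weather in location",
                  "parameters": {
                    "type": "object",
                    "properties": {
                      "location": {"type": "string"},
                      "unit": {"type": "string"}
                    },
                    "required": ["location"]
                  }
                }
              }]
            }
            """.formatted(model);
    }

    static HttpRequest buildRequest(String baseUrl, String model) {
        return HttpRequest.newBuilder(URI.create(baseUrl + "/v1/chat/completions"))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(requestBody(model)))
                .build();
    }

    public static void main(String[] args) throws Exception {
        HttpRequest request = buildRequest(BASE_URL, MODEL);
        System.out.println(request.method() + " " + request.uri());
        // Sending requires a running Ollama server.
        if (args.length > 0 && args[0].equals("--send")) {
            HttpResponse<String> response = HttpClient.newHttpClient()
                    .send(request, HttpResponse.BodyHandlers.ofString());
            // If the model decides to use the tool, the reply should contain tool_calls.
            System.out.println(response.body());
        }
    }
}
```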

As the Ollama blog post notes, their API does not currently support streaming tool calls or tool choice.

Once Ollama resolves those limitations, Spring AI is ready to support them as well.

By building on Ollama's innovative tool support and integrating it into the Spring ecosystem, Spring AI has created a powerful new way for Java developers to create AI-enhanced applications. This feature opens up exciting possibilities for creating more dynamic and responsive AI-powered systems that can interact with real-world data and services.

Some benefits of using Spring AI's Ollama Function Calling include:

We encourage you to try out this new feature and let us know how you're using it in your projects. For more detailed information and advanced usage, check out our official documentation.

Happy coding with Spring AI and Ollama!