The Model Context Protocol (MCP) standardizes how AI applications interact with external tools and resources. Spring joined the MCP ecosystem early as a key contributor, helping to develop and maintain the official MCP Java SDK that serves as the foundation for Java-based MCP implementations. Building on this contribution, Spring AI has embraced MCP with comprehensive support through dedicated Boot Starters and MCP Java Annotations, making it easier than ever to build sophisticated AI-powered applications that can seamlessly connect to external systems.

This blog introduces core MCP components and demonstrates building both MCP Servers and Clients using Spring AI, showcasing basic and advanced features. The complete source code is available at: MCP Weather Example.

Note: This content applies only to Spring AI 1.1.0-SNAPSHOT or Spring AI 1.1.0-M1+ versions.

The Model Context Protocol (MCP) is a standardized protocol that enables AI models to interact with external tools and resources in a structured way. Think of it as a bridge between your AI models and the real world - allowing them to access databases, APIs, file systems, and other external services through a consistent interface.

The Model Context Protocol follows a Client-Server architecture that ensures a clear separation of concerns. The MCP Server exposes specific capabilities (tools, resources, prompts) from third-party services. MCP Clients are instantiated by host applications to communicate with particular MCP Servers. Each client maintains a one-to-one connection with a single server.

The Host is the AI application users interact with, while clients are the protocol-level components that enable server connections.

MCP is language-agnostic, so clients and servers interoperate regardless of implementation language. Clients written in Java, Python, or TypeScript can communicate with servers written in any language, and vice versa.

This architecture establishes distinct boundaries and responsibilities between client and server-side development, naturally creating two distinct developer communities:

AI Application/Host Developers

Handle the complexity of orchestrating multiple MCP servers (connected via MCP Clients) and integrating with AI models. AI developers build AI applications that:

MCP Server (Provider) Developers

Focus on exposing specific capabilities (tools, resources, prompts) from third-party services as MCP Servers. Server developers create servers that:

This separation lets server developers concentrate on wrapping their domain-specific services without worrying about AI orchestration. At the same time, AI application developers can use existing MCP servers without understanding the intricacies of each third-party service.

The division of labor means that a database expert can create an MCP server for PostgreSQL without needing to understand LLM prompting, while an AI application developer can use that PostgreSQL server without knowing SQL internals. MCP acts as the common language between them.

Spring AI embraces this architecture with MCP Client and MCP Server Boot Starters. This means Spring developers can participate in both sides of the MCP ecosystem - building AI applications that consume MCP servers and creating MCP servers that expose Spring-based services to the wider AI community.

Shared between the Client and the Server, MCP provides an extensive set of features that enable seamless communication between AI applications and external services:

Important: Tools are owned by the LLM, unlike other MCP features such as prompts and resources. The LLM—not the Host—decides if, when, and in what order to call tools. The Host only controls which tool descriptions are offered to the LLM.
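The split of responsibilities described above can be illustrated with a small plain-Java sketch. The types below (ToolDescription, ToyHost) and the allow-list are hypothetical stand-ins, not MCP SDK or Spring AI classes. The only point is that the Host filters which descriptions are offered, while the decision to call a tool is left to the model, represented here by an arbitrary function.

```java
import java.util.List;
import java.util.Optional;
import java.util.Set;
import java.util.function.Function;

// Hypothetical stand-in for a tool description advertised by an MCP server.
record ToolDescription(String name, String description) {}

// Hypothetical Host: it only decides which descriptions the model gets to see.
class ToyHost {
    private final Set<String> allowedTools;

    ToyHost(Set<String> allowedTools) {
        this.allowedTools = allowedTools;
    }

    // Filter the server-provided tools down to the ones the Host wants to offer.
    List<ToolDescription> offer(List<ToolDescription> fromServers) {
        return fromServers.stream()
                .filter(tool -> allowedTools.contains(tool.name()))
                .toList();
    }

    // The "model" (any function here) picks a tool name, or none, from the offered ones.
    Optional<String> run(List<ToolDescription> fromServers,
                         Function<List<ToolDescription>, Optional<String>> model) {
        return model.apply(offer(fromServers));
    }
}

class ToolOwnershipDemo {
    public static void main(String[] args) {
        var tools = List.of(
                new ToolDescription("getTemperature", "Get the temperature for a location"),
                new ToolDescription("internalAdminTool", "Something the Host chooses not to offer"));
        var host = new ToyHost(Set.of("getTemperature"));

        // A trivial "model" that simply calls the first tool it is offered.
        Optional<String> chosen = host.run(tools,
                offered -> offered.stream().map(ToolDescription::name).findFirst());
        System.out.println(chosen.orElse("no tool called"));
    }
}
```

In a real application the filtering step corresponds to choosing which tool callbacks are registered with the chat client; everything after that is up to the LLM.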

Let's build a Streamable-HTTP MCP Server that provides real-time weather forecast information.

Create a new (mcp-weather-server) Spring Boot application:

@SpringBootApplication

public class McpServerApplication {

public static void main(String[] args) {

SpringApplication.run(McpServerApplication.class, args);

}

}

<dependency>

<groupId>org.springframework.ai</groupId>

<artifactId>spring-ai-starter-mcp-server-webmvc</artifactId>

</dependency>

Find more about the available server dependency options.

In application.properties, enable the Streamable HTTP server transport:

spring.ai.mcp.server.protocol=STREAMABLE

You can start the server with either STREAMABLE, STATELESS or SSE transport.

To enable the STDIO transport you need to set spring.ai.mcp.server.stdio=true.

Leverage the free Weather REST API to build a service that can retrieve weather forecasts by location coordinates.

Add @McpTool and @McpToolParam annotations to register the getTemperature method as an MCP Server Tool:

@Service

public class WeatherService {

public record WeatherResponse(Current current) {

public record Current(LocalDateTime time, int interval, double temperature_2m) {}

}

@McpTool(description = "Get the temperature (in celsius) for a specific location")

public WeatherResponse getTemperature(

@McpToolParam(description = "The location latitude") double latitude,

@McpToolParam(description = "The location longitude") double longitude) {

return RestClient.create()

.get()

.uri("https://api.open-meteo.com/v1/forecast?latitude={latitude}&longitude={longitude}&current=temperature_2m",

latitude, longitude)

.retrieve()

.body(WeatherResponse.class);

}

}
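To see what the tool actually requests, it can help to expand the URI template by hand. The following is a minimal JDK-only sketch, assuming the query parameters are exactly latitude, longitude and current=temperature_2m as in the service; the class and method names are hypothetical and it does not perform the HTTP call.

```java
import java.math.BigDecimal;
import java.net.URI;

class OpenMeteoUrlSketch {

    // Render a coordinate without scientific notation (Double.toString may use it for tiny values).
    private static String plain(double value) {
        return BigDecimal.valueOf(value).toPlainString();
    }

    // Manually expand the {latitude} and {longitude} placeholders used in the service.
    static URI forecastUri(double latitude, double longitude) {
        return URI.create("https://api.open-meteo.com/v1/forecast"
                + "?latitude=" + plain(latitude)
                + "&longitude=" + plain(longitude)
                + "&current=temperature_2m");
    }

    public static void main(String[] args) {
        // Coordinates taken from the client example later in this post.
        System.out.println(forecastUri(47.6062, -122.3321));
    }
}
```

Opening the printed URL in a browser is a quick way to check the shape of the JSON that the WeatherResponse record is mapped from.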

./mvnw clean install -DskipTests

java -jar target/mcp-weather-server-0.0.1-SNAPSHOT.jar

This starts the mcp-weather-server on port 8080.

Once the MCP Weather Server is up and running, you can interact with it using various MCP compliant client applications:

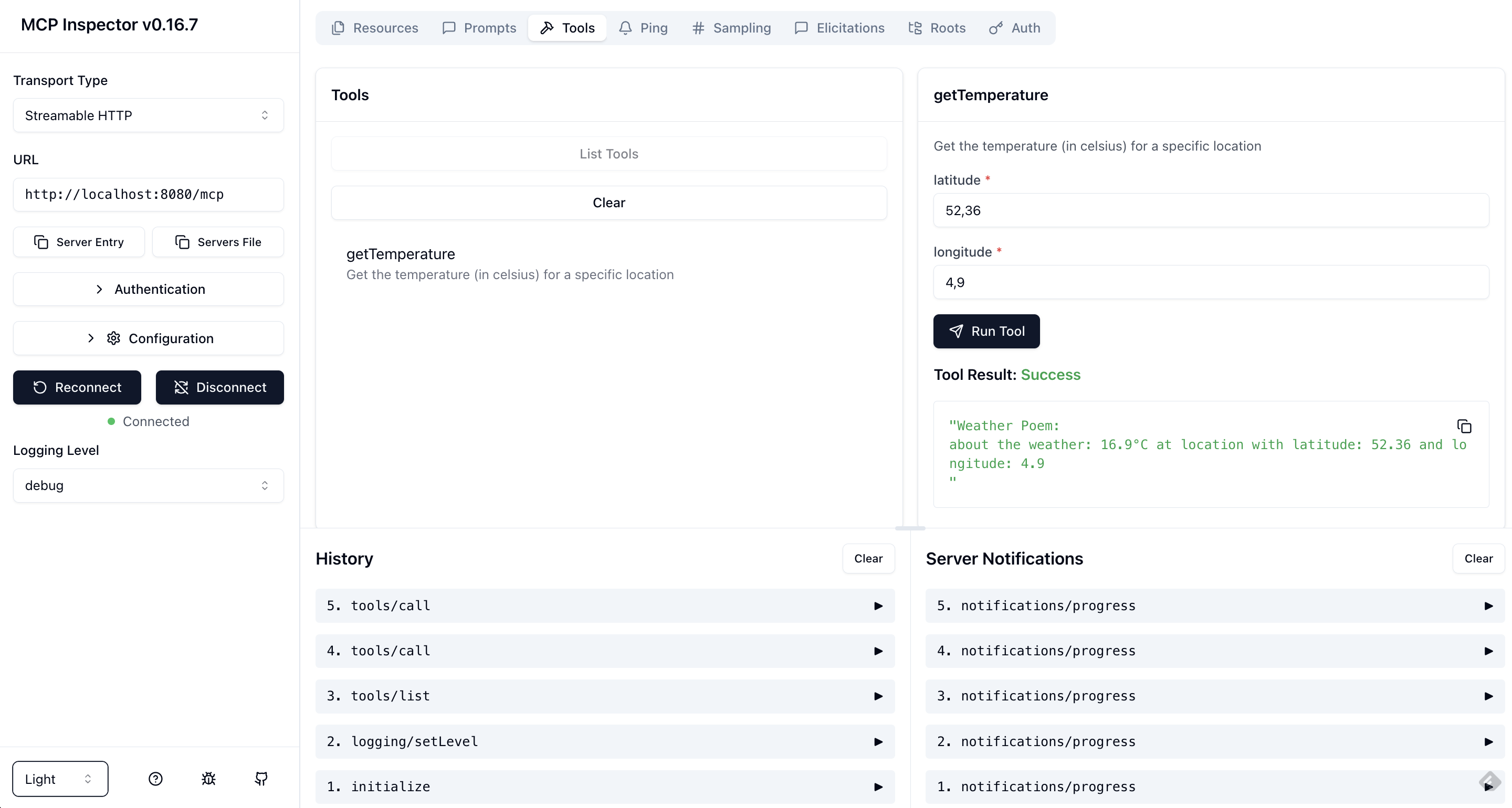

The MCP Inspector is an interactive developer tool for testing and debugging MCP servers. To start the inspector run:

npx @modelcontextprotocol/inspector

In the browser UI, set the Transport Type to Streamable HTTP and the URL to http://localhost:8080/mcp.

Click Connect to establish the connection.

Then list the tools and run the getTemperature tool.
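Listing and calling tools boils down to JSON-RPC 2.0 requests (methods tools/list and tools/call) posted to the /mcp endpoint. The sketch below builds those payloads and an unsent HTTP request using only the JDK. The class name and request ids are hypothetical, and the code is not a working client: a real session first performs the initialize handshake and echoes any session id header the server returns, both of which are omitted here.

```java
import java.net.URI;
import java.net.http.HttpRequest;
import java.util.Locale;

class McpJsonRpcSketch {

    // JSON-RPC request asking the server for its list of tools.
    static String listToolsPayload(int id) {
        return "{\"jsonrpc\":\"2.0\",\"id\":" + id + ",\"method\":\"tools/list\"}";
    }

    // JSON-RPC request invoking the getTemperature tool with numeric arguments.
    static String callTemperaturePayload(int id, double latitude, double longitude) {
        return String.format(Locale.ROOT,
                "{\"jsonrpc\":\"2.0\",\"id\":%d,\"method\":\"tools/call\","
                        + "\"params\":{\"name\":\"getTemperature\","
                        + "\"arguments\":{\"latitude\":%s,\"longitude\":%s}}}",
                id, latitude, longitude);
    }

    // Build (but do not send) the POST request for the Streamable HTTP endpoint.
    static HttpRequest post(String payload) {
        return HttpRequest.newBuilder(URI.create("http://localhost:8080/mcp"))
                .header("Content-Type", "application/json")
                // Streamable HTTP clients accept both plain JSON and SSE responses.
                .header("Accept", "application/json, text/event-stream")
                .POST(HttpRequest.BodyPublishers.ofString(payload))
                .build();
    }

    public static void main(String[] args) {
        System.out.println(listToolsPayload(1));
        String call = callTemperaturePayload(2, 47.6062, -122.3321);
        System.out.println(call);
        System.out.println(post(call).method() + " " + post(call).uri());
    }
}
```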

Use the MCP Java SDK client to programmatically connect to the server:

var client = McpClient.sync(

HttpClientStreamableHttpTransport

.builder("http://localhost:8080").build())

.build();

client.initialize();

CallToolResult weather = client.callTool(

new CallToolRequest("getTemperature",

Map.of("latitude", "47.6062",

"longitude", "-122.3321")));

Other MCP compliant AI Applications/SDKs

Connect your MCP server to popular AI applications:

To integrate with Claude Desktop, using the local STDIO transport, add the following configuration to your Claude Desktop settings:

{

"mcpServers": {

"spring-ai-mcp-weather": {

"command": "java",

"args": [

"-Dspring.ai.mcp.server.stdio=true",

"-Dspring.main.web-application-type=none",

"-Dlogging.pattern.console=",

"-jar",

"/path/to/mcp-weather-server-0.0.1.jar"]

}

}

}

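Replace /path/to/mcp-weather-server-0.0.1.jar with the absolute path to your built JAR file, for example the target/mcp-weather-server-0.0.1-SNAPSHOT.jar produced by the build above.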

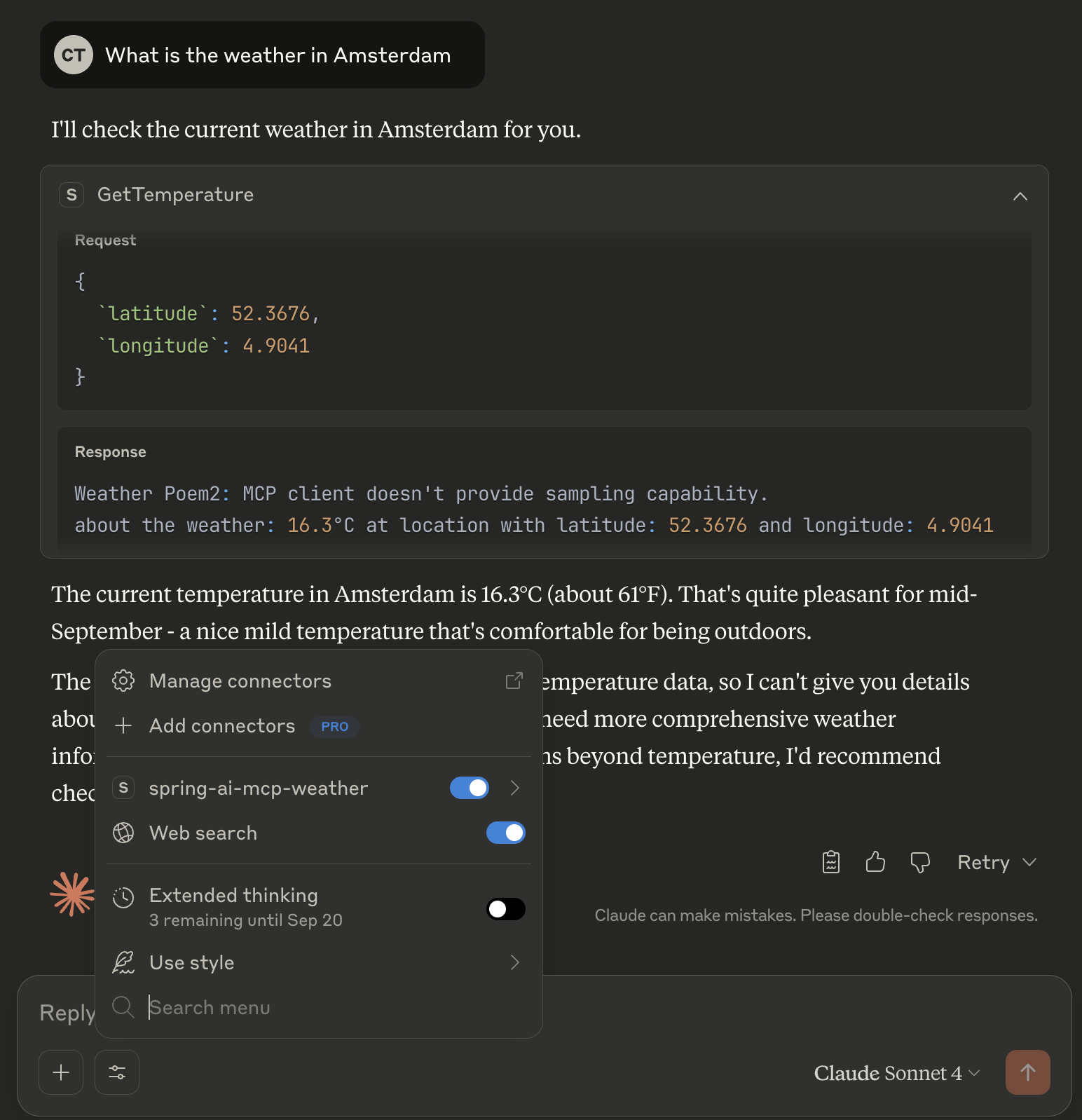

Follow the MCP server installation for Claude Desktop for further guidance. Note that the free version of Claude Desktop doesn't support Sampling.

Let's extend our MCP Weather Server to demonstrate advanced MCP capabilities including Logging, Progress Tracking, and Sampling. These features enable rich interactions between servers and clients:

In this enhanced version, our weather server will log its operations to the client for transparency, report progress as it fetches and processes weather data, and request the client's LLM to generate an epic poem about the weather forecast.

Here's the updated server implementation:

@Service

public class WeatherService {

public record WeatherResponse(Current current) {

public record Current(LocalDateTime time, int interval, double temperature_2m) {}

}

@McpTool(description = "Get the temperature (in celsius) for a specific location")

public String getTemperature(

McpSyncServerExchange exchange, // (1)

@McpToolParam(description = "The location latitude") double latitude,

@McpToolParam(description = "The location longitude") double longitude,

@McpProgressToken String progressToken) { // (2)

exchange.loggingNotification(LoggingMessageNotification.builder() // (3)

.level(LoggingLevel.DEBUG)

.data("Call getTemperature Tool with latitude: " + latitude + " and longitude: " + longitude)

.meta(Map.of()) // non null meta as a workaround for bug: ...

.build());

WeatherResponse weatherResponse = RestClient.create()

.get()

.uri("https://api.open-meteo.com/v1/forecast?latitude={latitude}&longitude={longitude}&current=temperature_2m",

latitude, longitude)

.retrieve()

.body(WeatherResponse.class);

String epicPoem = "MCP Client doesn't provide sampling capability.";

if (exchange.getClientCapabilities().sampling() != null) {

// 50% progress

exchange.progressNotification(new ProgressNotification(progressToken, 0.5, 1.0, "Start sampling")); // (4)

String samplingMessage = """

For a weather forecast (temperature is in Celsius): %s.

At location with latitude: %s and longitude: %s.

Please write an epic poem about this forecast using a Shakespearean style.

""".formatted(weatherResponse.current().temperature_2m(), latitude, longitude);

CreateMessageResult samplingResponse = exchange.createMessage(CreateMessageRequest.builder()

.systemPrompt("You are a poet!")

.messages(List.of(new SamplingMessage(Role.USER, new TextContent(samplingMessage))))

.build()); // (5)

epicPoem = ((TextContent) samplingResponse.content()).text();

}

// 100% progress

exchange.progressNotification(new ProgressNotification(progressToken, 1.0, 1.0, "Task completed"));

return """

Weather Poem: %s

about the weather: %s°C at location: (%s, %s)

""".formatted(epicPoem, weatherResponse.current().temperature_2m(), latitude, longitude);

}

}

(1) McpSyncServerExchange - the exchange parameter provides access to server-client communication capabilities. It allows the server to send notifications and make requests back to the client.

(2) @McpProgressToken - the progressToken parameter enables progress tracking. The client provides this token, and the server uses it to send progress updates.

(3) Logging Notifications - sends structured log messages to the client for debugging and monitoring purposes.

(4) Progress Updates - reports operation progress (50% in this case) to the client with a descriptive message.

(5) Sampling Request - asks the client's LLM to generate content through exchange.createMessage(...), after checking that the client advertises the sampling capability. This allows the server to leverage the client's AI capabilities, creating a bidirectional AI interaction pattern.

The enhanced weather service now returns not just weather data, but a creative poem about the forecast, demonstrating the powerful synergy between MCP servers and AI models.

Let's build an AI application that uses an LLM and connects to MCP Servers via MCP Clients.

Create a new Spring Boot project (mcp-weather-client) with the following dependencies:

<dependency>

<groupId>org.springframework.ai</groupId>

<artifactId>spring-ai-starter-mcp-client</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.ai</groupId>

<artifactId>spring-ai-starter-model-anthropic</artifactId>

</dependency>

Find out more about the available dependency options to configure different transport mechanisms.

In application.yml, configure the connection to the MCP Server:

spring:
  main:
    web-application-type: none
  ai:
    # Set credentials for your Anthropic API account
    anthropic:
      api-key: ${ANTHROPIC_API_KEY}
    # Connect to the MCP Weather Server using streamable-http client transport
    mcp:
      client:
        streamable-http:
          connections:
            my-weather-server:
              url: http://localhost:8080

Note that the configuration has assigned the my-weather-server name to the server connection.

Create a client application that uses ChatClient connected to an LLM and to the MCP Weather Server:

@SpringBootApplication

public class McpClientApplication {

public static void main(String[] args) {

SpringApplication.run(McpClientApplication.class, args).close(); // (1)

}

@Bean

public ChatClient chatClient(ChatClient.Builder chatClientBuilder) { // (2)

return chatClientBuilder.build();

}

String userPrompt = """

Check the weather in Amsterdam right now and show the creative response!

Please incorporate all creative responses from all LLM providers.

""";

@Bean

public CommandLineRunner predefinedQuestions(ChatClient chatClient, ToolCallbackProvider mcpToolProvider) { // (3)

return args -> System.out.println(

chatClient.prompt(userPrompt) // (4)

.toolContext(Map.of("progressToken", "token-" + new Random().nextInt())) // (5)

.toolCallbacks(mcpToolProvider) // (6)

.call()

.content());

}

}

(1) Application Lifecycle Management - the application starts, executes the weather query, displays the result, and then exits cleanly.

(2) ChatClient Configuration - creates a ChatClient bean from the builder that Spring AI's auto-configuration populates for you.

(3) CommandLineRunner - runs automatically after the application context is fully loaded. It injects the configured ChatClient for AI model interaction and the ToolCallbackProvider, which contains all registered MCP tools from the connected servers.

(4) AI Prompt - instructs the AI model to get Amsterdam's current weather. The AI model automatically discovers and calls the appropriate MCP tools based on the prompt.

(5) Progress Token - uses the toolContext to pass a unique progressToken to MCP tools that declare an @McpProgressToken parameter.

(6) MCP Tool Integration - this crucial line connects the ChatClient to all available MCP tools. mcpToolProvider is auto-configured by Spring AI's MCP Client starter and contains the tools from the connected MCP servers (configured via spring.ai.mcp.client.*.connections.*).

Create a service class to handle MCP notifications and requests from the server. These handlers are the client-side counterparts to the advanced server features we implemented above, enabling bidirectional communication between the MCP Server and Client:

@Service

public class McpClientHandlers {

private static final Logger logger = LoggerFactory.getLogger(McpClientHandlers.class);

private final ChatClient chatClient;

public McpClientHandlers(@Lazy ChatClient chatClient) { // Lazy is needed to avoid circular dependency

this.chatClient = chatClient;

}

@McpProgress(clients = "my-weather-server") // (1)

public void progressHandler(ProgressNotification progressNotification) {

logger.info("MCP PROGRESS: [{}] progress: {} total: {} message: {}",

progressNotification.progressToken(), progressNotification.progress(),

progressNotification.total(), progressNotification.message());

}

@McpLogging(clients = "my-weather-server")

public void loggingHandler(LoggingMessageNotification loggingMessage) {

logger.info("MCP LOGGING: [{}] {}", loggingMessage.level(), loggingMessage.data());

}

@McpSampling(clients = "my-weather-server")

public CreateMessageResult samplingHandler(CreateMessageRequest llmRequest) {

logger.info("MCP SAMPLING: {}", llmRequest);

String llmResponse = chatClient

.prompt()

.system(llmRequest.systemPrompt())

.user(((TextContent) llmRequest.messages().get(0).content()).text())

.call()

.content();

return CreateMessageResult.builder().content(new TextContent(llmResponse)).build();

}

}

Progress Handler - receives real-time progress updates from the server's long-running operations. It is triggered when the server calls exchange.progressNotification(...). For example, the weather server sends 50% progress when it starts sampling, then 100% when it completes. Commonly used to display progress bars, update UI status, or log operation progress.

Logging Handler - receives structured log messages from the server for debugging and monitoring. It is triggered when the server calls exchange.loggingNotification(...). For example, the weather server logs "Call getTemperature Tool with latitude: X and longitude: Y". Used for debugging server operations, audit trails, or monitoring dashboards.

Sampling Handler - the most powerful feature. It enables the server to request AI-generated content from the client's LLM, and is used for bidirectional AI interactions, creative content generation, and dynamic responses. It is triggered when the server calls exchange.createMessage(...) after checking that the client supports sampling. The execution flow looks like this:
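As a rough trace of that flow, the following plain-Java simulation records the order of events for a single getTemperature call: a log message, 50% progress, the sampling request answered by the client's LLM, and 100% progress. Everything in it is a hypothetical stand-in (ToyClient, ToyServer, the canned temperature and the canned "LLM" reply); no MCP SDK or Spring AI types are involved.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.UnaryOperator;

// Hypothetical client side: receives notifications and serves sampling requests.
class ToyClient {
    final List<String> events = new ArrayList<>();
    private final UnaryOperator<String> llm; // stands in for the client's LLM

    ToyClient(UnaryOperator<String> llm) {
        this.llm = llm;
    }

    void onLogging(String message) {
        events.add("logging: " + message);
    }

    void onProgress(double progress, double total) {
        events.add("progress: " + Math.round(100 * progress / total) + "%");
    }

    String onSampling(String prompt) {
        events.add("sampling request");
        return llm.apply(prompt);
    }
}

// Hypothetical server side: the tool calls back into the client while it runs.
class ToyServer {
    String getTemperature(ToyClient client, double latitude, double longitude) {
        client.onLogging("getTemperature(" + latitude + ", " + longitude + ")");
        double temperature = 12.5; // canned value instead of a real forecast lookup
        client.onProgress(0.5, 1.0);
        String poem = client.onSampling("Write a poem about " + temperature + " degrees Celsius");
        client.onProgress(1.0, 1.0);
        return poem + " (" + temperature + " C)";
    }
}

class SamplingFlowDemo {
    public static void main(String[] args) {
        ToyClient client = new ToyClient(prompt -> "O mild and gentle day!");
        String result = new ToyServer().getTemperature(client, 52.37, 4.89);
        client.events.forEach(System.out::println);
        System.out.println("tool result: " + result);
    }
}
```

The simulation is synchronous and in-process; in the real setup each of these steps is a notification or request travelling over the MCP transport.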

Annotation-Based Routing: The clients = "my-weather-server" attribute ensures handlers only process notifications from the specific MCP server connection defined in your configuration: spring.ai.mcp.client.streamable-http.connections.[my-weather-server].url.

If your application connects to multiple MCP servers, use the clients attribute to assign each handler to the corresponding MCP Client:

@McpProgress(clients = {"weather-server", "database-server"}) // Handle progress from multiple servers

public void multiServerProgressHandler(ProgressNotification notification) {

// Handle progress from both servers

}

@McpSampling(clients = "specialized-ai-server") // Handle sampling from specific server

public CreateMessageResult specializedSamplingHandler(CreateMessageRequest request) {

// Handle sampling requests from specialized AI server

}
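To make the routing idea concrete, here is a small reflection-based sketch in plain Java. The @OnProgress annotation, ToyHandlers and ToyDispatcher are hypothetical and only mimic how a clients attribute can select handlers by connection name. It is an illustration of the concept, not a description of how Spring AI implements @McpProgress internally.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Method;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical annotation mimicking the "clients" attribute.
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
@interface OnProgress {
    String[] clients();
}

class ToyHandlers {
    final List<String> seen = new ArrayList<>();

    @OnProgress(clients = {"weather-server", "database-server"})
    public void shared(String message) {
        seen.add("shared: " + message);
    }

    @OnProgress(clients = "weather-server")
    public void weatherOnly(String message) {
        seen.add("weatherOnly: " + message);
    }
}

class ToyDispatcher {
    // Invoke every annotated handler whose clients list contains the connection name.
    static void dispatch(Object handlers, String connection, String message) {
        for (Method method : handlers.getClass().getDeclaredMethods()) {
            OnProgress annotation = method.getAnnotation(OnProgress.class);
            if (annotation == null || !Arrays.asList(annotation.clients()).contains(connection)) {
                continue; // not a handler, or registered for a different connection
            }
            try {
                method.setAccessible(true);
                method.invoke(handlers, message);
            } catch (ReflectiveOperationException e) {
                throw new IllegalStateException("Handler invocation failed", e);
            }
        }
    }
}

class RoutingDemo {
    public static void main(String[] args) {
        ToyHandlers handlers = new ToyHandlers();
        ToyDispatcher.dispatch(handlers, "database-server", "50%"); // reaches only shared(...)
        ToyDispatcher.dispatch(handlers, "weather-server", "100%"); // reaches both handlers
        handlers.seen.forEach(System.out::println);
    }
}
```

Note that reflection does not guarantee the order in which the two weather-server handlers run, so handlers should not depend on each other.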

The @Lazy annotation on the ChatClient constructor parameter prevents the circular dependency that can occur when the ChatClient also depends on MCP components.

Bidirectional AI Communication: The sampling handler creates a powerful pattern where:

The server (domain expert) can leverage the client's AI capabilities

The client's LLM generates creative content based on server-provided context

This enables sophisticated AI-to-AI interactions beyond simple tool invocation

This architecture makes the MCP Client a reactive participant in server operations, enabling sophisticated interactions rather than just passive tool consumption.

Connect to multiple MCP servers using different transports. Here's how to add the Brave Search MCP Server for web search alongside your weather server:

spring:
  ai:
    anthropic:
      api-key: ${ANTHROPIC_API_KEY}
    mcp:
      client:
        streamable-http:
          connections:
            my-weather-server:
              url: http://localhost:8080
        stdio:
          connections:
            brave-search:
              command: npx
              args: ["-y", "@modelcontextprotocol/server-brave-search"]

The brave-search connection uses the STDIO client transport, while the weather server keeps using Streamable HTTP.

Now your LLM can combine weather data and web search in a single prompt:

String userPrompt = """

Check the weather in Amsterdam and show the creative response!

Please incorporate all creative responses.

Then search online to find publishers for poetry and list top 3.

""";

Make sure that your MCP Weather Server is up and running.

Then build and start your client:

./mvnw clean install -DskipTests

java -jar target/mcp-weather-client-0.0.1-SNAPSHOT.jar

The combination of Spring's proven development model with MCP's standardized protocol creates a powerful foundation for the next generation of AI applications. Whether you're building chatbots, data analysis tools, or development assistants, Spring AI's MCP support provides the building blocks you need.

This introduction covered the essential MCP concepts and demonstrated how to build both MCP Servers and Clients using Spring AI's Boot Starters with basic Tool functionality. However, the MCP ecosystem offers much more sophisticated capabilities that we'll explore in upcoming blog posts:

Java MCP Annotations Deep Dive: Learn how to leverage Spring AI's annotation-based approach for creating more maintainable and declarative MCP implementations, including advanced annotation patterns and best practices.

Beyond Tools - Prompts, Resources & Completions: Discover how to implement the full spectrum of MCP capabilities including shared prompt templates, dynamic resource provisioning, and intelligent autocompletion features that make your MCP servers more user-friendly and powerful.

Authorization support - securing MCP Servers: Secure your MCP Servers with OAuth 2, and ensure only authorized users can access tools, resources and other capabilities. Add authorization support to your MCP Clients, so they can obtain OAuth 2 tokens to authenticate with secure MCP servers.

Ready to get started? Check out the example applications and explore the full potential of AI integration with Spring AI and MCP.

For the latest updates and comprehensive documentation, visit the Spring AI Reference Documentation.