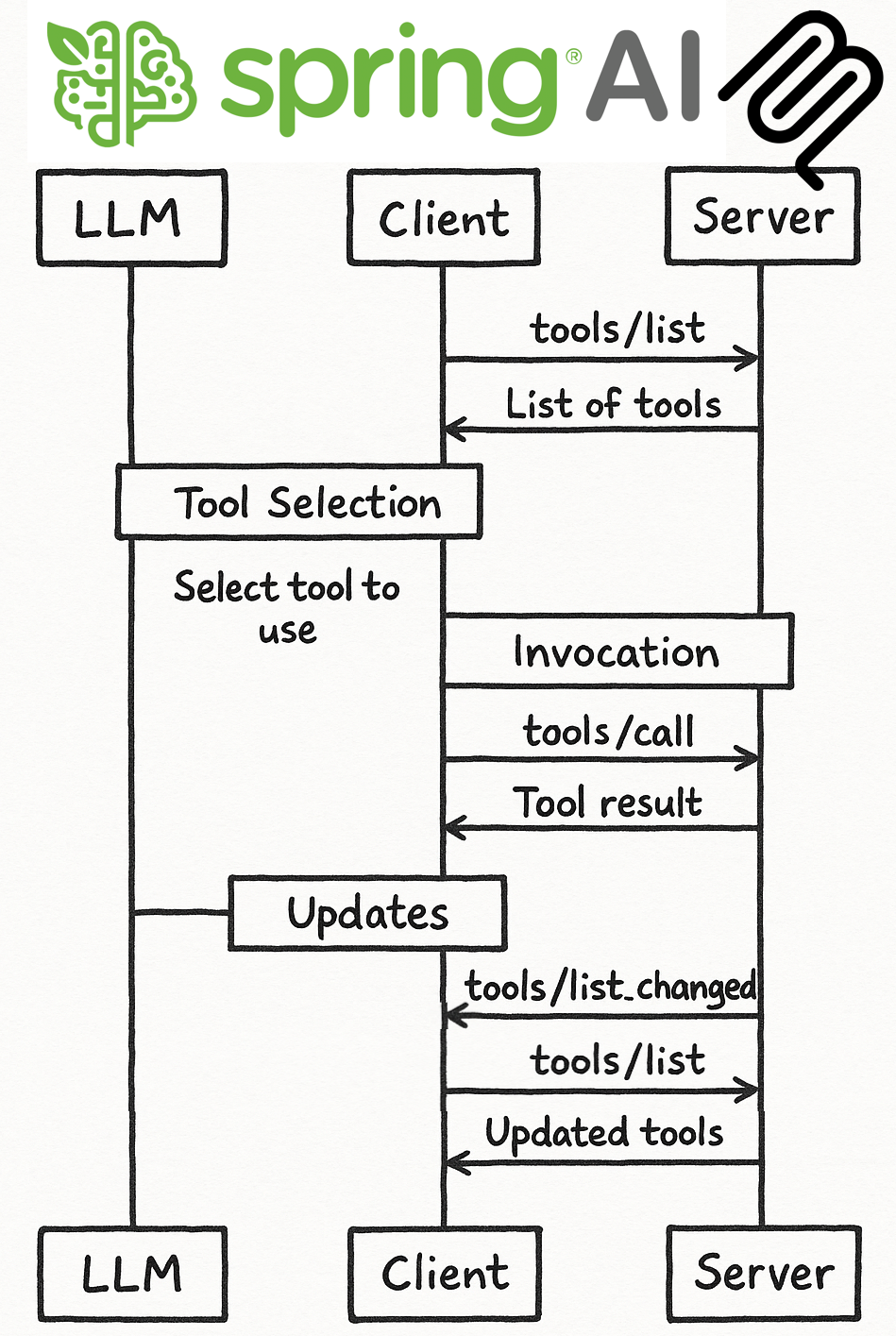

Dynamic Tool Updates in Spring AI's Model Context Protocol

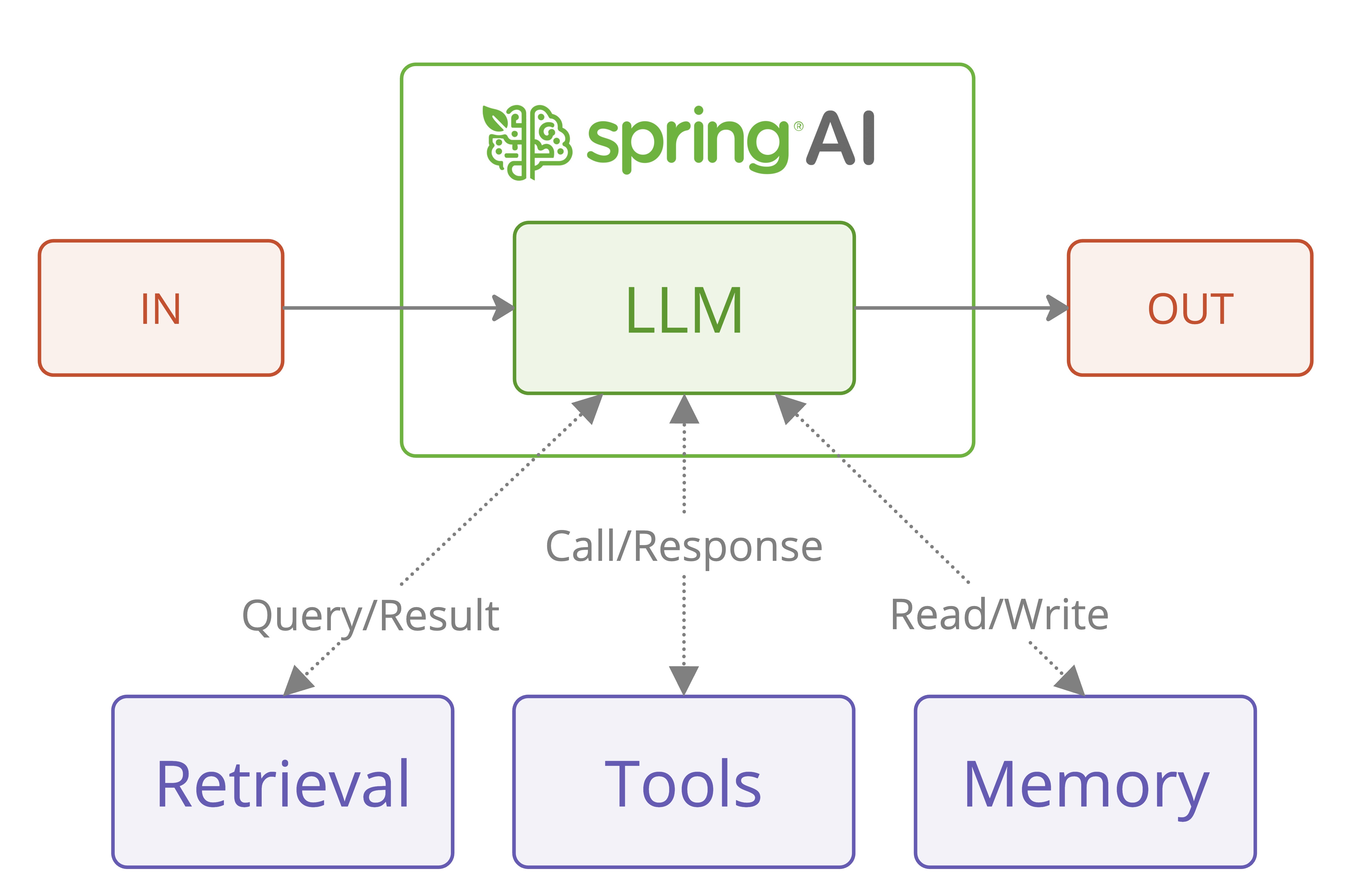

The Model Context Protocol (MCP) is a powerful feature in Spring AI that enables AI models to access external tools and resources through a standardized interface. One of MCP's more interesting capabilities is that the set of available tools can be updated dynamically at runtime.

This blog post explores how Spring AI implements dynamic tool updates in MCP, and how they make AI-powered applications more flexible and extensible.

The related example code is available here: Dynamic Tool Update Example

Understanding the Model Context Protocol

Before diving into dynamic tool updates, let's understand what MCP is and…