On behalf of the Spring AI engineering team and everyone who contributed to this release, I am very excited to announce the general availability of Spring AI 1.0. We have a great release blog lined up for you.

All the new bits are in Maven Central. Use the provided BOM to import the dependencies.

```xml
<dependencyManagement>
    <dependencies>
        <dependency>
            <groupId>org.springframework.ai</groupId>
            <artifactId>spring-ai-bom</artifactId>
            <version>1.0.0</version>
            <type>pom</type>
            <scope>import</scope>
        </dependency>
    </dependencies>
</dependencyManagement>
```

Check out the Upgrade Notes for the latest breaking changes and how to upgrade. NOTE: You can automate the upgrade to 1.0.0-GA using an OpenRewrite recipe, which applies many of the necessary code changes for this version. Find the recipe and usage instructions at Arconia Spring AI Migrations.

You can get started creating 1.0 GA apps on the Spring Initializr website and read the Getting Started section in the reference documentation.

First up, of course, is a new song. Check out the latest track in the Spring AI playlist; it will make you happy.

Second, here is a selection of blogs written for the 1.0 GA release by the many friends and family we have worked with over the past two years, showing how to use Spring AI in various ways:

Additionally, there is a comprehensive article from Josh Long demonstrating how to use Spring AI with Anthropic's Claude, as well as Daniel Garnier-Moiroux's blog post, MCP Authorization in practice with Spring AI and OAuth2.

Third, we have a new Spring AI logo! Many thanks to Sergi Almar, the organizer of the Spring IO conference, and designer Jorge Rigabert for creating such a nice logo. The modifications possible with the lowercase letter 'i' are fascinating to see.

Let's take a tour of the Spring AI 1.0 GA feature set.

At the heart of Spring AI is the ChatClient, a portable and easy-to-use API that is the primary interface for interacting with AI models.

Spring AI’s ChatClient supports 20 AI models, from Anthropic to ZhiPu. It supports multimodal input and output (when the underlying model supports them) and structured responses, most often in JSON, for easier processing of output in your application.

For a detailed comparison of AI model features, visit the Chat Models Comparison in our reference docs.

Read our reference docs for more information on ChatClient. You can see it in action in Josh's blog.

Crafting the right prompt, the input you pass to the model, is an important skill. Several patterns help you get the best results from an AI model.

You can find examples of prompt usage in the reference docs.

Spring AI’s reference documentation also covers prompt engineering techniques, with example code based on the comprehensive Prompt Engineering Guide.
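Prompts are usually built from templates with named placeholders. Spring AI's PromptTemplate uses a similar `{placeholder}` style by default. The sketch below is a hypothetical, JDK-only stand-in (`ToyPromptTemplate` is not a Spring AI class) and only shows the substitution idea. It fails fast on a missing variable rather than sending a half-filled prompt.

```java
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical, JDK-only template renderer; not Spring AI's PromptTemplate class.
class ToyPromptTemplate {

    // Matches placeholders such as {topic}; group 1 captures the variable name.
    private static final Pattern PLACEHOLDER = Pattern.compile("\\{(\\w+)\\}");

    static String render(String template, Map<String, String> variables) {
        Matcher matcher = PLACEHOLDER.matcher(template);
        StringBuilder out = new StringBuilder();
        while (matcher.find()) {
            String name = matcher.group(1);
            String value = variables.get(name);
            if (value == null) {
                // Fail fast instead of sending a half-filled prompt to the model.
                throw new IllegalArgumentException("Missing template variable: " + name);
            }
            // quoteReplacement stops '$' or '\' in the value from being read as group references.
            matcher.appendReplacement(out, Matcher.quoteReplacement(value));
        }
        matcher.appendTail(out);
        return out.toString();
    }

    public static void main(String[] args) {
        String prompt = render("Tell me a {adjective} joke about {topic}",
                Map.of("adjective", "funny", "topic", "cows"));
        System.out.println(prompt); // Tell me a funny joke about cows
    }
}
```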

However, real-world AI applications go beyond simple request/response interactions with a stateless AI Model API.

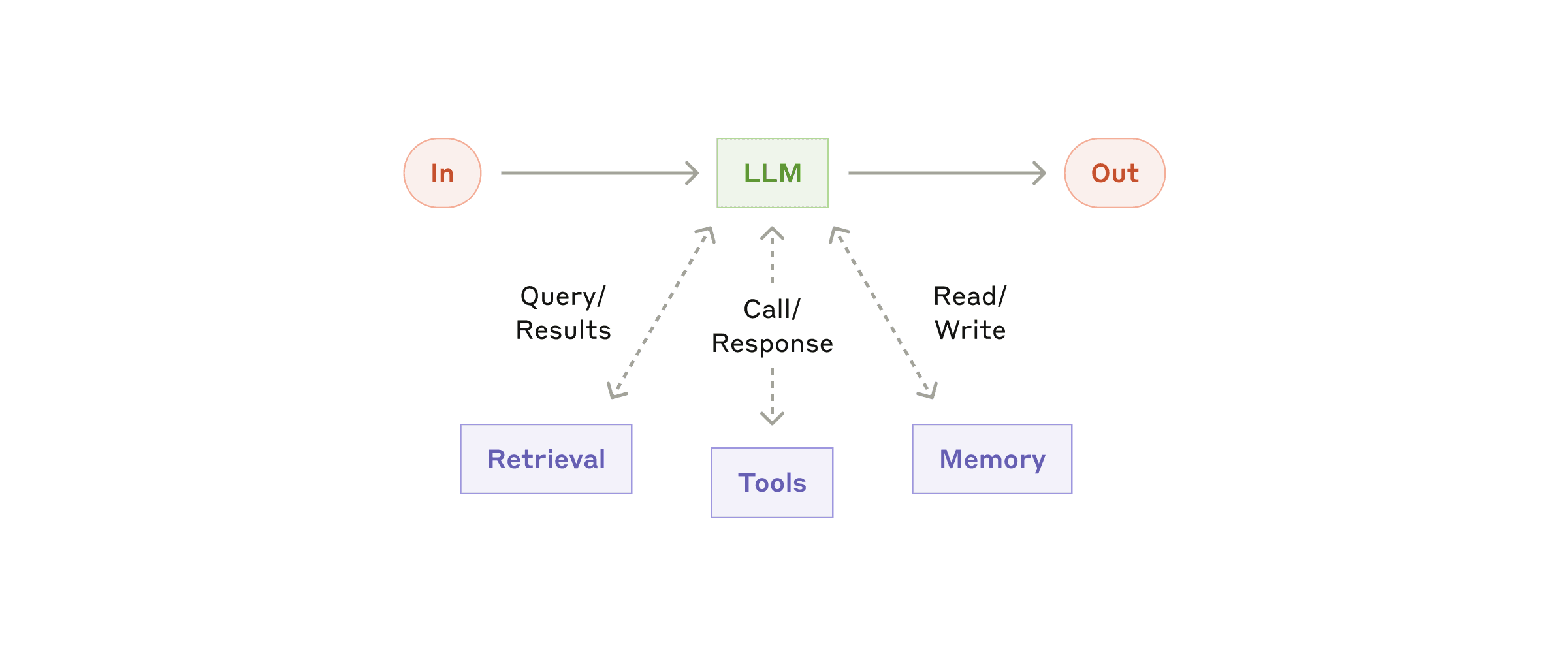

To build effective AI applications, a supporting cast of features is essential. This is where the concept of the Augmented LLM, shown in the diagram, comes in: it extends the base model interaction with capabilities such as data retrieval, conversational memory, and tool calling. These capabilities allow you to bring your own data and external APIs directly into the model’s reasoning process.

Key to implementing this pattern in Spring AI are Advisors.

A key feature of Spring AI’s ChatClient is the Advisor API. This is an interceptor chain that allows you to modify the incoming prompt by injecting retrieved data and conversation memory.
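To make the "interceptor chain" idea concrete, here is a minimal JDK-only sketch. `ToyAdvisor` and `ToyAdvisorChain` are hypothetical names, not Spring AI's Advisor interfaces, and the model is a plain function. Each advisor can rewrite the prompt and then hand it to the next link. The last link calls the model.

```java
import java.util.List;
import java.util.function.Function;
import java.util.function.UnaryOperator;

// Hypothetical, JDK-only interceptor chain; ToyAdvisor is not Spring AI's Advisor interface.
class ToyAdvisorChain {

    // An advisor may rewrite the prompt before calling the rest of the chain,
    // and may also post-process the response that comes back.
    interface ToyAdvisor {
        String advise(String prompt, Function<String, String> next);
    }

    private final List<ToyAdvisor> advisors;
    private final UnaryOperator<String> model; // stand-in for the real model call

    ToyAdvisorChain(List<ToyAdvisor> advisors, UnaryOperator<String> model) {
        this.advisors = List.copyOf(advisors);
        this.model = model;
    }

    String call(String prompt) {
        return invoke(0, prompt);
    }

    // Each advisor gets a "next" function that continues with the following advisor;
    // after the last advisor, the model itself is called.
    private String invoke(int index, String prompt) {
        if (index == advisors.size()) {
            return model.apply(prompt);
        }
        return advisors.get(index).advise(prompt, p -> invoke(index + 1, p));
    }

    public static void main(String[] args) {
        ToyAdvisor memory = (prompt, next) -> next.apply("[history] User said hi.\n" + prompt);
        ToyAdvisor retrieval = (prompt, next) -> next.apply("[context] Spring AI 1.0 is GA.\n" + prompt);
        // An echo "model" returns the final prompt so you can see what the advisors injected.
        ToyAdvisorChain chain = new ToyAdvisorChain(List.of(memory, retrieval), p -> p);
        System.out.println(chain.call("Is Spring AI GA?"));
    }
}
```

Advisors run in list order, so each one sees the prompt as already modified by the advisors before it.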

You can read more about them in the section in the reference documentation.

Let’s now dive into each component of the Augmented LLM.

At the heart of retrieving data in AI applications is a database, and vector databases in particular are the most common choice for this purpose. Spring AI provides a portable vector store abstraction that supports 20 different vector databases, from Azure Cosmos DB to Weaviate.

A common challenge when working with these databases is that each has its own query language for metadata filtering. Spring AI solves this with a portable filter expression language that uses a familiar SQL-like syntax. If you ever reach the limits of this abstraction, you can fall back to native queries.
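The value of a portable filter language is that one expression tree can be translated into each store's native syntax. The following sketch is hypothetical and uses a tiny toy AST (`Eq`, `Gte`, `And`), not Spring AI's own filter classes. It renders the same filter as an SQL-like WHERE clause and as a MongoDB-style JSON filter. String escaping is deliberately minimal.

```java
// Hypothetical toy filter AST with two "native" renderers; not Spring AI's filter classes.
class ToyFilters {

    interface Expr {}
    record Eq(String key, Object value) implements Expr {}
    record Gte(String key, Object value) implements Expr {}
    record And(Expr left, Expr right) implements Expr {}

    // Dialect 1: SQL-like WHERE clause.
    static String toSql(Expr e) {
        if (e instanceof Eq eq) return eq.key() + " = " + sqlLiteral(eq.value());
        if (e instanceof Gte gte) return gte.key() + " >= " + sqlLiteral(gte.value());
        if (e instanceof And conj) return "(" + toSql(conj.left()) + " AND " + toSql(conj.right()) + ")";
        throw new IllegalArgumentException("Unsupported expression: " + e);
    }

    // Dialect 2: MongoDB-style JSON filter document.
    static String toMongoJson(Expr e) {
        if (e instanceof Eq eq) return "{\"" + eq.key() + "\": {\"$eq\": " + jsonLiteral(eq.value()) + "}}";
        if (e instanceof Gte gte) return "{\"" + gte.key() + "\": {\"$gte\": " + jsonLiteral(gte.value()) + "}}";
        if (e instanceof And conj) return "{\"$and\": [" + toMongoJson(conj.left()) + ", " + toMongoJson(conj.right()) + "]}";
        throw new IllegalArgumentException("Unsupported expression: " + e);
    }

    // Strings are quoted per dialect; numbers are emitted as-is.
    private static String sqlLiteral(Object v) {
        return (v instanceof String s) ? "'" + s.replace("'", "''") + "'" : String.valueOf(v);
    }

    private static String jsonLiteral(Object v) {
        return (v instanceof String s) ? "\"" + s.replace("\"", "\\\"") + "\"" : String.valueOf(v);
    }

    public static void main(String[] args) {
        Expr filter = new And(new Eq("country", "UK"), new Gte("year", 2020));
        System.out.println(toSql(filter));
        System.out.println(toMongoJson(filter));
    }
}
```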

Spring AI includes a lightweight, configurable ETL (Extract, Transform, Load) framework to streamline the process of importing your data into a vector store. It supports a wide range of input sources through pluggable DocumentReader components, including local file systems, web pages, GitHub repositories, AWS S3, Azure Blob Storage, Google Cloud Storage, Kafka, MongoDB, and JDBC-compatible databases. This makes it easy to bring content from virtually anywhere into your RAG pipeline, with built-in support for chunking, metadata enrichment, and embedding generation. You can read more about the ETL features in the section in the reference documentation.
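Chunking is the "transform" step that usually sits between reading documents and embedding them. Spring AI ships splitters such as TokenTextSplitter, which count tokens. For simplicity, this hypothetical sketch (`ToyChunker`) splits on words instead and overlaps neighbouring chunks so that some context survives at each boundary.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical word-based splitter with overlap; Spring AI's TokenTextSplitter counts tokens instead.
class ToyChunker {

    static List<String> chunk(String text, int chunkSize, int overlap) {
        if (chunkSize <= 0 || overlap < 0 || overlap >= chunkSize) {
            throw new IllegalArgumentException("Require chunkSize > 0 and 0 <= overlap < chunkSize");
        }
        if (text.isBlank()) {
            return List.of();
        }
        String[] words = text.trim().split("\\s+");
        List<String> chunks = new ArrayList<>();
        int step = chunkSize - overlap; // how far each window advances
        for (int start = 0; start < words.length; start += step) {
            int end = Math.min(start + chunkSize, words.length);
            chunks.add(String.join(" ", Arrays.copyOfRange(words, start, end)));
            if (end == words.length) {
                break; // this window already reached the last word
            }
        }
        return chunks;
    }

    public static void main(String[] args) {
        // Each chunk repeats the last word of the previous one.
        System.out.println(chunk("Spring AI reads documents splits them and stores embeddings", 4, 1));
    }
}
```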

Spring AI also comes with extensive support for the pattern of Retrieval Augmented Generation, or RAG, that enables the AI models to ground their responses based on the data you pass into it. You can start simple with QuestionAnswerAdvisor to inject relevant context into prompts, or scale up to a more sophisticated, modular RAG pipeline tailored to your application with the RetrievalAugmentationAdvisor.
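At its simplest, the RAG flow that QuestionAnswerAdvisor automates is "retrieve similar documents, then stuff them into the prompt". The JDK-only sketch below assumes precomputed, hand-written 2-D embeddings instead of a real embedding model and vector store. It ranks documents by cosine similarity, keeps the top k, and builds an augmented prompt. `ToyRag` and the prompt wording are hypothetical.

```java
import java.util.Comparator;
import java.util.List;
import java.util.stream.Collectors;

// Hypothetical toy retriever: embeddings are hand-written vectors, not output of a real embedding model.
class ToyRag {

    record Doc(String text, double[] embedding) {}

    // Cosine similarity; assumes non-zero vectors of equal length.
    static double cosine(double[] a, double[] b) {
        double dot = 0, normA = 0, normB = 0;
        for (int i = 0; i < a.length; i++) {
            dot += a[i] * b[i];
            normA += a[i] * a[i];
            normB += b[i] * b[i];
        }
        return dot / (Math.sqrt(normA) * Math.sqrt(normB));
    }

    // Rank documents by similarity to the query embedding and keep the best k.
    static List<Doc> topK(List<Doc> docs, double[] queryEmbedding, int k) {
        return docs.stream()
                .sorted(Comparator.comparingDouble((Doc d) -> cosine(d.embedding(), queryEmbedding)).reversed())
                .limit(k)
                .collect(Collectors.toList());
    }

    // Insert the retrieved text into the prompt so the model can ground its answer.
    static String augment(String question, List<Doc> context) {
        String joined = context.stream().map(Doc::text).collect(Collectors.joining("\n"));
        return "Use only the context below to answer.\nContext:\n" + joined + "\nQuestion: " + question;
    }

    public static void main(String[] args) {
        List<Doc> docs = List.of(
                new Doc("Spring AI 1.0 is GA", new double[] {1.0, 0.0}),
                new Doc("Cats sleep a lot", new double[] {0.0, 1.0}),
                new Doc("ChatClient is the main API", new double[] {0.9, 0.1}));
        double[] query = {1.0, 0.0}; // pretend embedding of the user's question
        System.out.println(augment("Is Spring AI released?", topK(docs, query, 2)));
    }
}
```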

You can read more about them in the section in the reference documentation.

For a tutorial on implementing RAG in your Spring AI applications, explore our guide to Retrieval Augmented Generation which walks through setting up vector stores, embedding documents, and creating effective retrieval pipelines with practical code samples.

Conversational history is an essential ingredient in creating an AI chat application. Spring AI supports this with the ChatMemory interface, which manages storing and retrieving messages. The MessageWindowChatMemory implementation keeps the last N messages in a sliding window, updating itself as the conversation progresses. It delegates storage to a ChatMemoryRepository, and we currently provide repository implementations for JDBC, Cassandra, and Neo4j, with more on the way.
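The sliding-window idea fits in a few lines. In this hypothetical sketch (`ToyWindowMemory`, not Spring AI's MessageWindowChatMemory), a HashMap plays the role of the ChatMemoryRepository, keyed by conversation id. The oldest messages are evicted once the window exceeds N. Details of the real implementation, such as how system messages are treated, are not modelled here.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical sliding-window memory; the HashMap stands in for a ChatMemoryRepository.
class ToyWindowMemory {

    record Message(String role, String text) {}

    private final int maxMessages;
    private final Map<String, Deque<Message>> repository = new HashMap<>();

    ToyWindowMemory(int maxMessages) {
        if (maxMessages <= 0) {
            throw new IllegalArgumentException("maxMessages must be positive");
        }
        this.maxMessages = maxMessages;
    }

    // Append a message and evict the oldest ones beyond the window size.
    void add(String conversationId, Message message) {
        Deque<Message> window = repository.computeIfAbsent(conversationId, id -> new ArrayDeque<>());
        window.addLast(message);
        while (window.size() > maxMessages) {
            window.removeFirst();
        }
    }

    // Messages for one conversation, oldest first; empty if the conversation is unknown.
    List<Message> get(String conversationId) {
        Deque<Message> window = repository.get(conversationId);
        return window == null ? List.of() : List.copyOf(window);
    }

    public static void main(String[] args) {
        ToyWindowMemory memory = new ToyWindowMemory(2);
        memory.add("c1", new Message("user", "My name is Ada."));
        memory.add("c1", new Message("assistant", "Nice to meet you, Ada."));
        memory.add("c1", new Message("user", "What is my name?"));
        // Only the last two messages survive; the first user message was evicted.
        memory.get("c1").forEach(m -> System.out.println(m.role() + ": " + m.text()));
    }
}
```

A pure recency window can forget important early facts, as the example shows. That trade-off motivates semantic alternatives such as the VectorStoreChatMemoryAdvisor.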

An alternative is to use the VectorStoreChatMemoryAdvisor. Instead of just remembering the most recent messages, it uses vector search to retrieve the most semantically similar messages from past conversations.

You can read more about them in the section in the reference documentation.

For a walkthrough on implementing chat memory in your Spring AI applications, check out our guide to chat memory implementation which covers both basic and advanced memory patterns, including code examples for persistent storage options.

Spring AI makes it easy to extend what models can do through tools: custom functions that let AI retrieve external information or take real-world actions. Tool calling (also referred to as function calling) was first widely introduced by OpenAI in June 2023 with the release of the function calling feature in their gpt-4 and gpt-3.5-turbo models.

Tools can fetch current weather, query databases, or return the latest news, helping models answer questions beyond their training data. They can also trigger workflows, send emails, or update systems, turning the model into an active participant in your application. Defining tools is simple: use the @Tool annotation for declarative methods, register beans dynamically with @Bean, or create them programmatically for full control.
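The declarative style works by scanning annotated methods and dispatching the model's tool-call requests to them by name. Spring AI's own @Tool support also generates input schemas for the model. This hypothetical JDK-only sketch uses a made-up `@ToyTool` annotation (not Spring AI's `@Tool`) and plain reflection to show only the registration and dispatch steps. The weather value is hard-coded.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Method;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical reflection-based tool registry; @ToyTool is a stand-in, not Spring AI's @Tool.
class ToyTools {

    @Retention(RetentionPolicy.RUNTIME)
    @Target(ElementType.METHOD)
    @interface ToyTool {
        String description();
    }

    // Example tool holder; the temperature is hard-coded for illustration.
    static class WeatherTools {
        @ToyTool(description = "Get the current temperature in Celsius for a city")
        public String currentTemperature(String city) {
            return city + ": 21C";
        }

        public String notATool() {
            return "ignored"; // not annotated, so never exposed to the model
        }
    }

    // Tool name -> description, as you might advertise the tools to a model.
    static Map<String, String> describe(Object target) {
        Map<String, String> tools = new TreeMap<>();
        for (Method m : target.getClass().getMethods()) {
            ToyTool tool = m.getAnnotation(ToyTool.class);
            if (tool != null) {
                tools.put(m.getName(), tool.description());
            }
        }
        return tools;
    }

    // Dispatch a tool call (as requested by the model) to the matching annotated method.
    static Object call(Object target, String name, Object... args) {
        for (Method m : target.getClass().getMethods()) {
            if (m.getName().equals(name) && m.isAnnotationPresent(ToyTool.class)) {
                try {
                    return m.invoke(target, args);
                } catch (ReflectiveOperationException e) {
                    throw new IllegalStateException("Tool call failed: " + name, e);
                }
            }
        }
        throw new IllegalArgumentException("No tool named " + name);
    }

    public static void main(String[] args) {
        WeatherTools tools = new WeatherTools();
        System.out.println(describe(tools));
        System.out.println(call(tools, "currentTemperature", "Lisbon"));
    }
}
```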

You can read more about them in the section in the reference documentation.

For a walkthrough on implementing tool calling in your Spring AI applications, see our guide to local tool calling, which demonstrates how to create, register, and use tools with practical examples and best practices.

Using this technology to create an application is all fun and games, but how do you know it is working? Unfortunately, it isn’t as straightforward as writing traditional unit or integration tests and checking that they pass. You need to evaluate the AI model's response across a range of criteria. For example, is the answer relevant to the question asked? Did it hallucinate? Was the answer based on the provided facts?

To get a handle on this, one should start off doing so-called 'vibe checks'. As the name implies, this is manually reviewing the responses and using your own judgment to determine if the answer is correct. Of course, this is time-consuming, so there is an evolving set of techniques to help automate this process.

Spring AI makes it easy to check how accurate and relevant your AI-generated content is. It comes with a flexible Evaluator interface and two handy built-in evaluators:

RelevancyEvaluator – Helps you figure out if the AI's response actually matches the user’s question and the retrieved context. It’s perfect for testing RAG flows and uses a customizable prompt to ask another model, “Does this response make sense based on what was retrieved?”

FactCheckingEvaluator – Verifies whether the AI’s response is factually accurate based on the context provided. It works by asking the model to judge whether a statement is logically supported by a document. You can run this using smaller models like Bespoke Labs’ MiniCheck (via Ollama), making it way cheaper than using something like GPT-4 for every check.
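Both evaluators follow the "LLM as judge" pattern: build a grading prompt, ask a second model, and parse its verdict. The sketch below is hypothetical. `ToyEvaluator`, the prompt wording, and the YES/NO protocol are made up, and the judge is a plain function rather than a ChatClient. It illustrates the pattern only, not the exact behavior of Spring AI's RelevancyEvaluator or FactCheckingEvaluator.

```java
import java.util.function.UnaryOperator;

// Hypothetical LLM-as-judge evaluator; the grading prompt wording is invented for this sketch.
class ToyEvaluation {

    record EvaluationResult(boolean pass, String feedback) {}

    interface ToyEvaluator {
        EvaluationResult evaluate(String question, String context, String answer);
    }

    // Builds a grading prompt, sends it to a stand-in judge model and parses a YES/NO verdict.
    static ToyEvaluator relevancy(UnaryOperator<String> judgeModel) {
        return (question, context, answer) -> {
            String prompt = """
                    Is the answer relevant to the question and supported by the context?
                    Reply YES or NO.
                    Question: %s
                    Context: %s
                    Answer: %s""".formatted(question, context, answer);
            String verdict = judgeModel.apply(prompt).trim();
            return new EvaluationResult(verdict.equalsIgnoreCase("YES"), verdict);
        };
    }

    public static void main(String[] args) {
        // Fake judge; a real setup would call a second (possibly smaller, local) model.
        UnaryOperator<String> fakeJudge = p -> p.contains("Answer: Spring AI 1.0 is GA") ? "YES" : "NO";
        ToyEvaluator evaluator = relevancy(fakeJudge);
        System.out.println(evaluator.evaluate("Is Spring AI GA?", "The 1.0 GA release is out.", "Spring AI 1.0 is GA"));
    }
}
```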

However, LLM-based evaluation has its limits. Clémentine Fourrier, lead maintainer of Hugging Face's Open LLM Leaderboard, warns that "LLMs as judges" are not a silver bullet. In her interview on the Latent Space Podcast, she outlines key issues:

You can read more about Evaluation in the section in the reference documentation.

So best of luck! To get started, check out some nice articles that show the use of these evaluators.

When you're running AI in production, you need more than hope and good vibes; you need observability. Spring AI makes it easy to observe what your models are doing, how they’re performing, and what it’s all costing you.

Spring AI integrates with Micrometer to provide detailed telemetry on key metrics like:

Model latency – How long it takes for your model to respond (not just emotionally).

Token usage – Input/output tokens per request, so you can track and optimize costs.

Tool calls and retrievals – Know when your model is acting like a helpful assistant vs. just freeloading on your vector store.
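To show what "track and optimize costs" boils down to, here is a hypothetical JDK-only sketch (`ToyModelMetrics`). It times each model call, accumulates the reported input and output tokens, and turns them into a cost estimate. In Spring AI these numbers flow through Micrometer rather than a hand-rolled class. The prices used in the usage example (3 and 15 per million tokens) are placeholders, not any provider's actual pricing.

```java
import java.util.concurrent.atomic.LongAdder;
import java.util.function.Supplier;
import java.util.function.ToLongFunction;

// Hypothetical metrics holder; in Spring AI these values are recorded via Micrometer instead.
class ToyModelMetrics {

    private final LongAdder requests = new LongAdder();
    private final LongAdder inputTokens = new LongAdder();
    private final LongAdder outputTokens = new LongAdder();
    private final LongAdder totalNanos = new LongAdder();

    // Time a model call and record the token counts reported in its response.
    <R> R observe(Supplier<R> modelCall, ToLongFunction<R> inTokens, ToLongFunction<R> outTokens) {
        long start = System.nanoTime();
        R response = modelCall.get();
        totalNanos.add(System.nanoTime() - start);
        requests.increment();
        inputTokens.add(inTokens.applyAsLong(response));
        outputTokens.add(outTokens.applyAsLong(response));
        return response;
    }

    long requests() { return requests.sum(); }
    long inputTokens() { return inputTokens.sum(); }
    long outputTokens() { return outputTokens.sum(); }

    double averageLatencyMillis() {
        long n = requests.sum();
        return n == 0 ? 0.0 : totalNanos.sum() / 1_000_000.0 / n;
    }

    // Cost estimate from per-million-token prices supplied by the caller.
    double estimatedCost(double pricePerMillionInput, double pricePerMillionOutput) {
        return inputTokens.sum() * pricePerMillionInput / 1_000_000.0
                + outputTokens.sum() * pricePerMillionOutput / 1_000_000.0;
    }

    // Stand-in for a model response carrying usage data.
    record FakeResponse(String text, long promptTokens, long completionTokens) {}

    public static void main(String[] args) {
        ToyModelMetrics metrics = new ToyModelMetrics();
        metrics.observe(() -> new FakeResponse("Hi!", 1000, 500),
                FakeResponse::promptTokens, FakeResponse::completionTokens);
        metrics.observe(() -> new FakeResponse("Bye!", 3000, 1500),
                FakeResponse::promptTokens, FakeResponse::completionTokens);
        System.out.printf("requests=%d in=%d out=%d cost=%.3f%n", metrics.requests(),
                metrics.inputTokens(), metrics.outputTokens(), metrics.estimatedCost(3.0, 15.0));
    }
}
```

With these placeholder prices, 4,000 input tokens and 2,000 output tokens cost 4000 × 3 / 1,000,000 + 2000 × 15 / 1,000,000 = 0.012 + 0.030 = 0.042.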

You also get full tracing support via Micrometer Tracing, with spans for each major step in a model interaction. You can also get log messages that help with troubleshooting, such as the user prompt or the vector store response.

You can read more about Observability in the section in the reference documentation.

The Model Context Protocol (MCP) came on the scene in November of 2024. It took off like wildfire because it provides a standardized way for AI models to interact with external tools, prompts, and resources. MCP is a client-server oriented protocol and once you build an MCP server, you can easily adopt it in your application, no matter what programming language the MCP server was written in or what programming language the MCP client is written in.

This has really taken off in the tool space, though MCP isn't limited to tools. Now you can use 'out of the box' MCP servers for specific functionality like interacting with GitHub, without having to write that code yourself. From the AI tool perspective, it is like a class library of tools that you can easily add to your application.
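Part of what makes MCP language-agnostic is that its messages are plain JSON-RPC 2.0. A client discovers a server's tools with `tools/list` and invokes one with `tools/call`, passing the tool name and its arguments. This hypothetical sketch (`ToyMcpMessages`) builds such a request by hand to show the wire shape. The tool name `get_weather` and its `city` argument are made up, and only string arguments are supported. In Spring AI, the MCP Java SDK builds and sends these messages for you.

```java
import java.util.Map;
import java.util.TreeMap;
import java.util.stream.Collectors;

// Hypothetical hand-built MCP request; real clients use the MCP Java SDK instead.
class ToyMcpMessages {

    // Minimal JSON string quoting for this sketch (backslashes and double quotes only).
    static String quote(String s) {
        return "\"" + s.replace("\\", "\\\\").replace("\"", "\\\"") + "\"";
    }

    // JSON-RPC 2.0 "tools/call" request; arguments are sorted by key for stable output.
    static String toolsCall(long id, String toolName, Map<String, String> arguments) {
        String args = new TreeMap<>(arguments).entrySet().stream()
                .map(e -> quote(e.getKey()) + ":" + quote(e.getValue()))
                .collect(Collectors.joining(",", "{", "}"));
        return "{\"jsonrpc\":\"2.0\",\"id\":" + id
                + ",\"method\":\"tools/call\",\"params\":{\"name\":" + quote(toolName)
                + ",\"arguments\":" + args + "}}";
    }

    public static void main(String[] args) {
        System.out.println(toolsCall(1, "get_weather", Map.of("city", "London")));
    }
}
```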

The Spring AI team started support for MCP shortly after the specification was released and this code was then donated to Anthropic as the basis for the MCP Java SDK. Spring AI provides a rich set of features around this foundation.

Spring AI makes consuming Model Context Protocol (MCP) tools straightforward through its client-side starter module. By adding the `spring-ai-starter-mcp-client` dependency, you'll quickly connect with remote MCP servers. Spring Boot's auto-configuration handles the heavy lifting, so your client can invoke tools exposed by an MCP server without excessive boilerplate - letting you focus on building effective AI workflows. Spring makes it easy to connect to both stdio and HTTP-based SSE endpoints provided by MCP servers.

For a practical introduction, check out the MCP Client Example which demonstrates connecting to an MCP server that provides Brave web search - so you can add powerful search features right in your Spring app.

For a walkthrough on implementing Model Context Protocol in your Spring AI applications, see our guide to MCP which explains how to set up both client and server components, connect to external tools, and leverage the protocol for enhanced AI capabilities.

Spring AI simplifies creating MCP servers with its dedicated starter module and intuitive annotation-based approach. Add the spring-ai-starter-mcp-server dependency, and you can quickly transform Spring components into MCP-compliant servers.

The framework offers a clean syntax using the @Tool annotation to expose methods as tools. Parameters are automatically converted to the appropriate MCP format, and the framework handles the underlying protocol details: transport, serialization, and error handling. With minimal configuration, your Spring application can expose its functionality over both stdio and HTTP-based SSE endpoints.

You'll find several helpful examples in the spring-ai-examples repository - a good one to start with is the Spring AI MCP Weather STDIO Server.

Also check out projects in the Spring ecosystem that have started to embrace MCP with specialized servers:

These servers bring Spring's enterprise capabilities into the growing MCP ecosystem.

It should come as no surprise that in an enterprise environment you want a measure of control over what data is presented to the LLM as context and what APIs are made available, especially those that modify data or state. The MCP spec addresses these concerns via OAuth, and Spring Security and Spring Authorization Server have you covered. Spring Security guru Daniel Garnier-Moiroux goes into detail on securing MCP applications in his blog post MCP Authorization in practice with Spring AI and OAuth2.

2025 is the year of agents. The million-dollar question is how to define an agent, so here is a shot at it :). At its core, an agent "leverages an AI model to interact with its environment in order to solve a user-defined task." Effective agents combine planning, memory, and actions to fulfill tasks assigned by users.

There are two broad categories of agents:

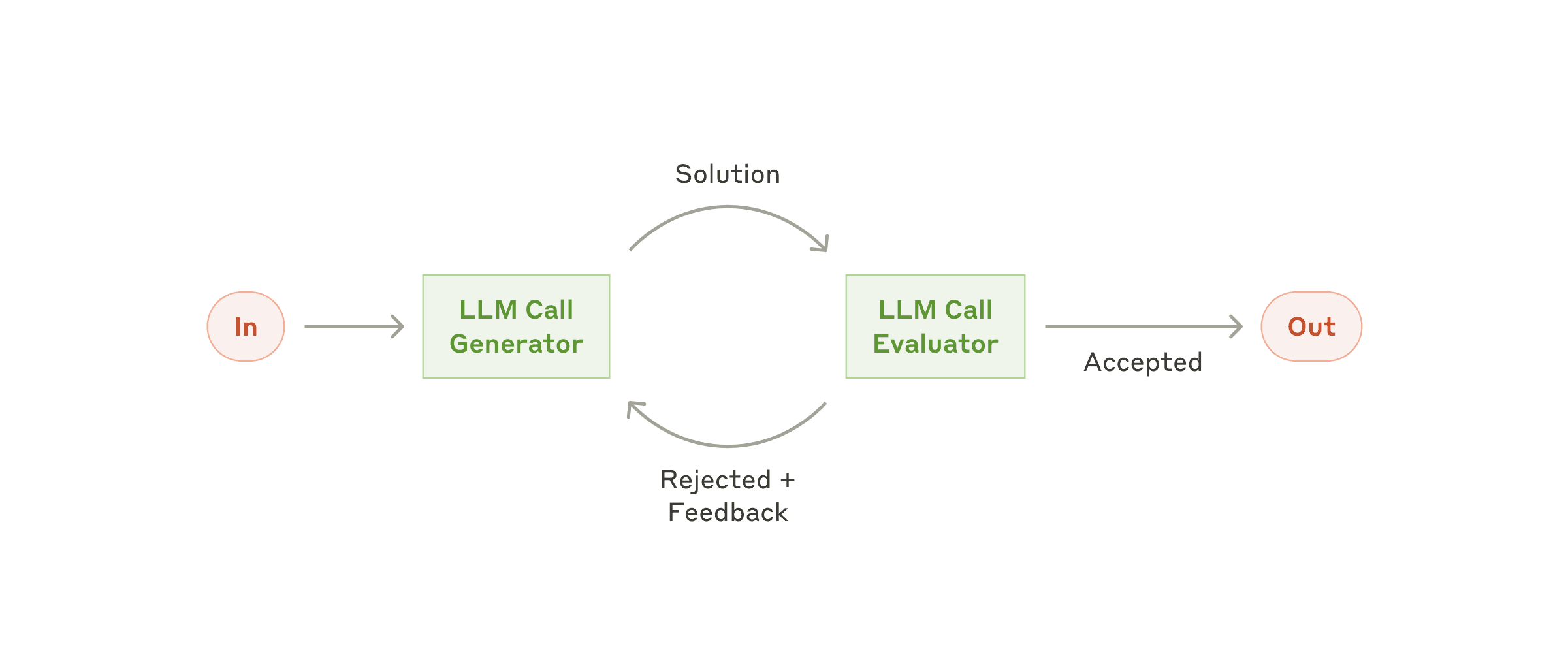

Workflows represent a more controlled approach where LLMs and tools are orchestrated through predefined paths. These workflows are prescriptive, guiding the AI through established sequences of operations to achieve predictable outcomes.

Autonomous Agents, by contrast, allow LLMs to autonomously plan and execute processing steps toward accomplishing tasks. These agents determine their own path, deciding which tools to use and in what order without explicit instruction.

While fully autonomous agents are appealing for their flexibility, workflows offer better predictability and consistency for well-defined tasks. The choice between these approaches depends on your specific requirements and risk tolerance.

Spring AI supports several workflow patterns that structure agent behavior. In the workflow diagrams, each LLM box represents the augmented LLM shown earlier.

Routing – This pattern enables intelligent routing of inputs to specialized handlers based on classification of the user request and context.

Orchestrator Workers – This pattern is a flexible approach for handling complex tasks that require dynamic task decomposition and specialized processing.

Chaining – This pattern decomposes complex tasks into a sequence of steps, where each LLM call processes the output of the previous one.

Parallelization – This pattern is useful for scenarios requiring parallel execution of LLM calls with automated output aggregation.
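The control flow of three of these patterns can be sketched without any AI at all if each "LLM call" is replaced by a plain function. The hypothetical `ToyWorkflows` class below does this. In a Spring AI application each step would be a ChatClient call, and the classifier for routing would itself typically be an LLM call. Orchestrator-workers is not shown separately, since it roughly amounts to an LLM-driven decomposition step followed by worker calls like those in `parallel`.

```java
import java.util.List;
import java.util.Map;
import java.util.concurrent.CompletableFuture;
import java.util.function.Function;
import java.util.function.UnaryOperator;
import java.util.stream.Collectors;

// Hypothetical workflow sketches; each UnaryOperator stands in for one LLM call.
class ToyWorkflows {

    // Chaining: each step receives the previous step's output.
    static String chain(String input, List<UnaryOperator<String>> steps) {
        String current = input;
        for (UnaryOperator<String> step : steps) {
            current = step.apply(current);
        }
        return current;
    }

    // Parallelization: run the same step over many inputs concurrently, then aggregate in input order.
    static String parallel(List<String> inputs, UnaryOperator<String> step, String separator) {
        List<CompletableFuture<String>> futures = inputs.stream()
                .map(in -> CompletableFuture.supplyAsync(() -> step.apply(in)))
                .collect(Collectors.toList());
        return futures.stream().map(CompletableFuture::join).collect(Collectors.joining(separator));
    }

    // Routing: a classifier picks which specialized handler receives the input.
    static String route(String input, Function<String, String> classifier,
                        Map<String, UnaryOperator<String>> handlers) {
        String label = classifier.apply(input);
        UnaryOperator<String> handler = handlers.get(label);
        if (handler == null) {
            throw new IllegalArgumentException("No handler for route: " + label);
        }
        return handler.apply(input);
    }

    public static void main(String[] args) {
        UnaryOperator<String> draft = s -> "draft(" + s + ")";
        UnaryOperator<String> polish = s -> "polish(" + s + ")";
        System.out.println(chain("topic", List.of(draft, polish)));
        System.out.println(parallel(List.of("doc1", "doc2"), draft, " | "));
        Map<String, UnaryOperator<String>> handlers = Map.of(
                "billing", s -> "billing team: " + s,
                "support", s -> "support team: " + s);
        System.out.println(route("Where is my invoice?",
                s -> s.contains("invoice") ? "billing" : "support", handlers));
    }
}
```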

These patterns can be implemented using Spring AI's chat model and tool execution capabilities, with the framework handling much of the underlying complexity.

You can find out more in the Spring AI Examples repository and in the Building Effective Agents section of our reference documentation.

Spring AI also supports the development of autonomous agents through the Model Context Protocol. The incubating Spring MCP Agent project demonstrates how to create agents that:

Tanzu AI Solutions is available in Tanzu Platform 10 and above, and works best with Spring AI Apps:

For more information about deploying AI applications with Tanzu AI Server, visit the VMware Tanzu AI documentation, and for more on building agentic AI applications, check out the following blogs.

Recommended Reading on Agentic AI

Agentic AI: A New AI Paradigm Driving Business Success – Broadcom

Explores how agentic AI is redefining enterprise strategy by enabling autonomous decision-making, reducing friction, and driving business transformation.

AI Agents: Why Workflows Are the LLM Use Case to Watch – VMware Tanzu

Discusses why LLM-powered workflows represent a compelling real-world use case for AI agents, especially in enterprise software development and operations.

It has been truly gratifying to see the level of interest from the community, not only in using Spring AI but in contributing back to it. The entire team is deeply humbled by the experience. A big shoutout to Thomas Vitale (ThomasVitale), who has driven core features such as @Tool and RAG, among many other great contributions and bug fixes. Bravo!

I've curated the list of contributors, and it is long. I'd like to make a geo-map showing the many people from so many parts of the world. Thanks!

An AI merged the authors mentioned in the previous blogs; if I missed someone, I (and not the AI) apologize.

Next up: Spring AI 1.1, of course! Stay tuned!